2022-03-28

This post introduces the Jacobian matrix and the Hessian matrix: their definitions, how to calculate them, the significance of the Jacobian determinant, and the practical applications of both matrices.

Table of Contents

What is the Jacobian matrix?

Example of the Jacobian matrix

Practice problems on finding the Jacobian matrix

Jacobian matrix determinant

The Jacobian and the invertibility of a function

Applications of the Jacobian matrix

What is the Hessian matrix?

Hessian matrix example

Practice problems on finding the Hessian matrix

Applications of the Hessian matrix

Bordered Hessian matrix

What is the Jacobian matrix?

The definition of the Jacobian matrix is as follows:

The Jacobian matrix is a matrix composed of the first-order partial derivatives of a multivariable function.

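The formula for the Jacobian matrix of a function $f:\mathbb{R}^n \to \mathbb{R}^m$ with vector components $f_1, \dots, f_m$ and variables $x_1, \dots, x_n$ is the following:

$$
J_f = \begin{pmatrix}
\dfrac{\partial f_1}{\partial x_1} & \cdots & \dfrac{\partial f_1}{\partial x_n} \\
\vdots & \ddots & \vdots \\
\dfrac{\partial f_m}{\partial x_1} & \cdots & \dfrac{\partial f_m}{\partial x_n}
\end{pmatrix}
$$

Therefore, a Jacobian matrix always has as many rows as the function has vector components and as many columns as the function has variables.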

As a curiosity, the Jacobian matrix is named after Carl Gustav Jacob Jacobi, a 19th-century mathematician and professor who made important contributions to mathematics, in particular to the field of linear algebra.

Example of the Jacobian matrix

Having seen the meaning of the Jacobian matrix, we are going to see step by step how to compute the Jacobian matrix of a multivariable function.

- Find the Jacobian matrix at the point (1,2) of the following function:

First of all, we calculate all the first-order partial derivatives of the function:

Now we apply the formula of the Jacobian matrix. In this case, the function has two variables and two vector components, so the Jacobian matrix will be a 2×2 square matrix:

Once we have found the expression of the Jacobian matrix, we evaluate it at point (1,2):

And finally, we perform the operations:
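The same steps can be checked numerically. The sketch below is only an illustration: the function `example` (f(x, y) = (x²y, 3x + y³)) and the helper `numerical_jacobian` are hypothetical names chosen here, not taken from the example above. It approximates each partial derivative with a central difference and evaluates the result at the point (1, 2), where the analytic Jacobian of this illustrative function is [[4, 1], [3, 12]].

```python
from typing import Callable, List, Sequence


def numerical_jacobian(
    f: Callable[[Sequence[float]], List[float]],
    point: Sequence[float],
    h: float = 1e-6,
) -> List[List[float]]:
    """Approximate the Jacobian of f at `point` with central differences."""
    m = len(f(point))   # number of vector components (rows)
    n = len(point)      # number of variables (columns)
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        forward = list(point)
        backward = list(point)
        forward[j] += h
        backward[j] -= h
        f_plus = f(forward)
        f_minus = f(backward)
        for i in range(m):
            # Entry (i, j) is the partial derivative of f_i with respect to x_j.
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return jac


def example(p: Sequence[float]) -> List[float]:
    """Hypothetical function f(x, y) = (x^2 * y, 3x + y^3)."""
    x, y = p
    return [x**2 * y, 3 * x + y**3]


J = numerical_jacobian(example, [1.0, 2.0])
for row in J:
    print([round(value, 4) for value in row])
```

The matrix has two rows (one per vector component) and two columns (one per variable), as the definition requires.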

Practice problems on finding the Jacobian matrix

Now that you have seen how to find the Jacobian matrix of a function, you can practice with several exercises solved step by step.

Problem 1

Compute the Jacobian matrix at the point (0, -2) of the following vector-valued function with 2 variables:

Solution

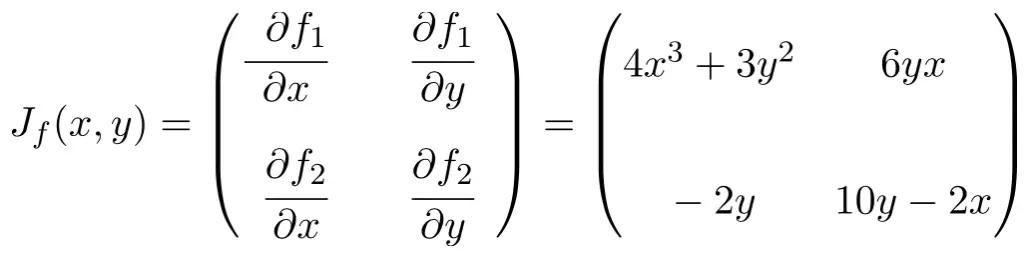

The function has two variables and two vector components so the Jacobian matrix will be a square matrix of order 2:

Once we have calculated the expression of the Jacobian matrix, we evaluate it at the point (0, -2):

And finally, we perform all the calculations:

Problem 2

Calculate the Jacobian matrix of the following 2-variable function at the point (2, -1):

Solution

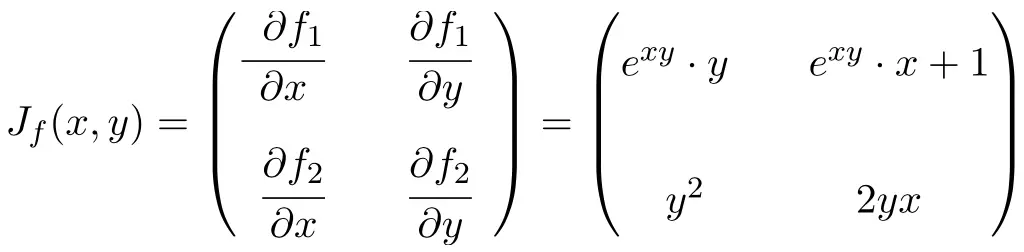

First, we apply the formula of the Jacobian matrix:

Then we evaluate the Jacobian matrix at the point (2, -1):

So the solution to the problem is:

Problem 3

Determine the Jacobian matrix at the point (2, -2,2) of the following function with 3 variables:

Solution

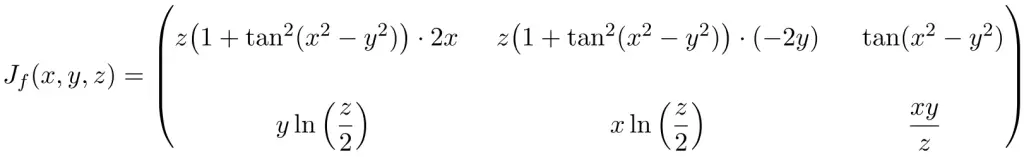

In this case, the function has three variables and two vector components, so the Jacobian matrix will be a rectangular 2×3 matrix:

Once we have the Jacobian matrix of the multivariable function, we evaluate it at the point (2, -2,2):

We perform all the calculations:

Problem 4

Find the Jacobian matrix of the following multivariable function at the point (π, π):

Solution

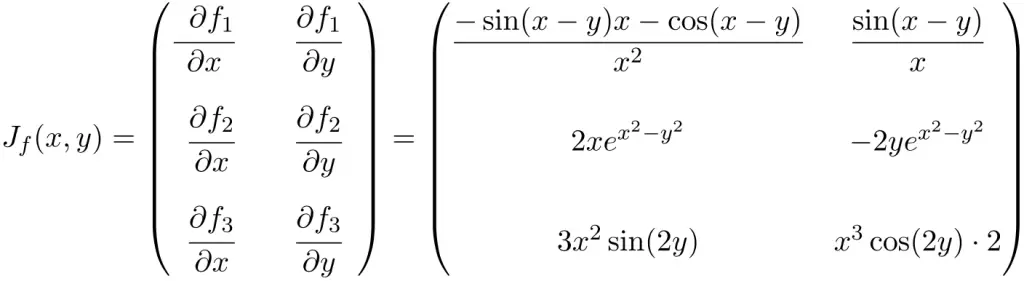

In this case, the function has two variables and three vector components, so the Jacobian matrix will be a rectangular matrix of size 3×2:

Next, we evaluate the Jacobian matrix at the point (π, π):

We compute all the operations:

So the Jacobian matrix of the vector-valued function at this point is:

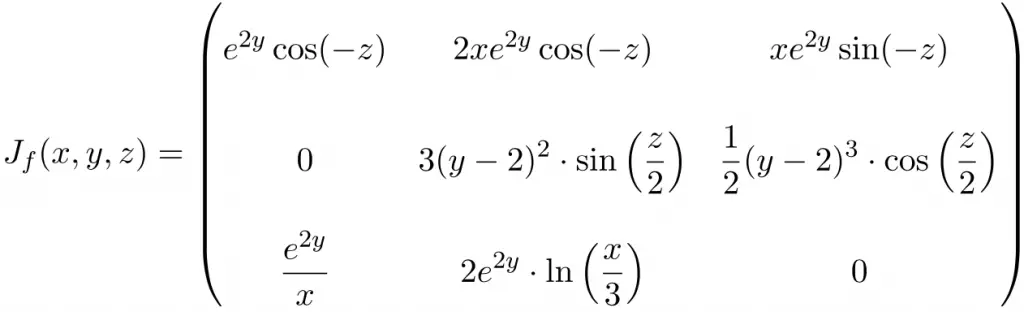

Problem 5

Calculate the Jacobian matrix at the point (3,0,π) of the following function with 3 variables:

Solution

In this case, the function has three variables and three vector components, therefore, the Jacobian matrix will be a 3×3 square matrix:

Once we have found the Jacobian matrix, we evaluate it at the point (3,0,π):

We calculate all the operations:

And the result of the Jacobian matrix is:

Jacobian matrix determinant

The determinant of the Jacobian matrix is called the Jacobian determinant, or simply the Jacobian. Note that the Jacobian determinant can only be calculated if the function has the same number of variables as vector components since then the Jacobian matrix is a square matrix.

Jacobian determinant example

Let’s see an example of how to calculate the Jacobian determinant of a function with two variables:

First, we calculate the Jacobian matrix of the function:

And now we take the determinant of the 2×2 matrix:
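As a separate illustration, here is a minimal sketch of a Jacobian determinant for a function chosen here as an assumption: the polar-coordinate map T(r, θ) = (r cos θ, r sin θ). Its Jacobian determinant simplifies analytically to r. The helper names `polar_jacobian` and `det2` are hypothetical.

```python
import math
from typing import List


def polar_jacobian(r: float, theta: float) -> List[List[float]]:
    """Analytic Jacobian of T(r, theta) = (r cos(theta), r sin(theta))."""
    return [
        [math.cos(theta), -r * math.sin(theta)],
        [math.sin(theta), r * math.cos(theta)],
    ]


def det2(m: List[List[float]]) -> float:
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


# r*cos^2(theta) + r*sin^2(theta) = r, so the determinant equals r.
value = det2(polar_jacobian(2.0, 0.7))
print(value)
```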

The Jacobian and the invertibility of a function

Now that you have seen the concept of the determinant of the Jacobian matrix, you may be wondering… what is it for?

Well, the Jacobian is used to determine whether a function can be inverted. The inverse function theorem states that if a continuously differentiable function has a nonzero Jacobian determinant at a point, then the function is invertible in a neighborhood of that point.

Note that this condition is sufficient but not necessary: if the determinant is different from zero, we can say that the function can be inverted locally; however, if the determinant is equal to 0, we do not know whether the function has an inverse or not.

In the example seen before, the Jacobian determinant is equal to zero only at the point (0,0). We can therefore affirm that the function is locally invertible around every other point, while at (0,0) we do not know whether the inverse function exists.
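A one-variable illustration (chosen here, not taken from the example above) shows why a zero Jacobian leaves the question open. For $f(x) = x^3$ the Jacobian is the ordinary derivative:

$$
f'(x) = 3x^2, \qquad f'(0) = 0, \qquad f^{-1}(y) = \sqrt[3]{y}
$$

The derivative vanishes at $x = 0$, yet the inverse $\sqrt[3]{y}$ exists for every real $y$. A zero Jacobian therefore does not rule out invertibility; it only means the theorem cannot be applied at that point.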

Applications of the Jacobian matrix

In addition to the utility that we have seen of the Jacobian, which determines whether a function is invertible, the Jacobian matrix has other applications.

The Jacobian matrix is used to find the critical points of a multivariable function, which are then classified as maximums, minimums, or saddle points using the Hessian matrix. To find the critical points, calculate the Jacobian matrix of the function (for a scalar-valued function, this is its gradient written as a row), set it equal to 0, and solve the resulting equations.
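As a short worked example, take the illustrative function $f(x, y) = x^2 + y^2 - 2x$ (an assumption made here for demonstration). Its Jacobian is a single row, and setting it to zero gives the critical point:

$$
J_f = \begin{pmatrix} 2x - 2 & 2y \end{pmatrix} = \begin{pmatrix} 0 & 0 \end{pmatrix}
\;\Longrightarrow\; x = 1,\; y = 0
$$

So the only critical point of this function is $(1, 0)$.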

Another application of the Jacobian matrix is found in the integration of functions of more than one variable, that is, in double integrals, triple integrals, and so on. The determinant of the Jacobian matrix allows a change of variables in multiple integrals according to the following formula:

$$
\int_{T(D)} f(\mathbf{x})\,d\mathbf{x} = \int_{D} f\big(T(\mathbf{u})\big)\,\left|\det J_T(\mathbf{u})\right|\,d\mathbf{u}
$$

where T is the change-of-variables function that relates the original variables to the new ones.
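As an illustration with a map chosen here, the polar change of variables $T(r, \theta) = (r\cos\theta, r\sin\theta)$ has Jacobian determinant $r$, which gives the area of a disk of radius $R$:

$$
\iint_{x^2 + y^2 \le R^2} dx\,dy = \int_0^{2\pi}\!\int_0^{R} r\,dr\,d\theta = 2\pi \cdot \frac{R^2}{2} = \pi R^2
$$

The factor $r$ inside the integral is exactly the absolute value of the Jacobian determinant of $T$.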

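Finally, the Jacobian matrix can also be used to compute a linear approximation of any differentiable function around a point $\mathbf{p}$:

$$
f(\mathbf{x}) \approx f(\mathbf{p}) + J_f(\mathbf{p})\,(\mathbf{x} - \mathbf{p})
$$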
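A minimal sketch of such a linear approximation follows. The function `f` (f(x, y) = (x²y, 3x + y³)), its hand-derived Jacobian `jacobian_f`, and the helper `linear_approximation` are hypothetical names introduced for illustration. The approximation adds the Jacobian times the displacement to the value of the function at the base point.

```python
from typing import List, Sequence


def f(x: float, y: float) -> List[float]:
    """Hypothetical function f(x, y) = (x^2 * y, 3x + y^3)."""
    return [x**2 * y, 3 * x + y**3]


def jacobian_f(x: float, y: float) -> List[List[float]]:
    """Jacobian of f, derived by hand from the definition."""
    return [[2 * x * y, x**2], [3.0, 3 * y**2]]


def linear_approximation(point: Sequence[float], base: Sequence[float]) -> List[float]:
    """Approximate f(point) by f(base) + J(base) * (point - base)."""
    value = f(*base)
    jac = jacobian_f(*base)
    delta = [p - b for p, b in zip(point, base)]
    return [
        value[i] + sum(jac[i][j] * delta[j] for j in range(len(delta)))
        for i in range(len(value))
    ]


approx = linear_approximation([1.1, 1.9], [1.0, 2.0])
exact = f(1.1, 1.9)
print(approx, exact)
```

The closer the evaluation point is to the base point, the smaller the gap between `approx` and `exact`.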

Hessian matrix

What is the Hessian matrix?

The definition of the Hessian matrix is as follows:

The Hessian matrix, or simply Hessian, is an n×n square matrix composed of the second-order partial derivatives of a function of n variables.

The Hessian matrix was named after Ludwig Otto Hesse, a 19th-century German mathematician who made very important contributions to the field of linear algebra.

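Thus, the formula for the Hessian matrix of a function $f$ of the variables $x_1, \dots, x_n$ is as follows:

$$
H_f = \begin{pmatrix}
\dfrac{\partial^2 f}{\partial x_1^2} & \dfrac{\partial^2 f}{\partial x_1\,\partial x_2} & \cdots & \dfrac{\partial^2 f}{\partial x_1\,\partial x_n} \\
\dfrac{\partial^2 f}{\partial x_2\,\partial x_1} & \dfrac{\partial^2 f}{\partial x_2^2} & \cdots & \dfrac{\partial^2 f}{\partial x_2\,\partial x_n} \\
\vdots & \vdots & \ddots & \vdots \\
\dfrac{\partial^2 f}{\partial x_n\,\partial x_1} & \dfrac{\partial^2 f}{\partial x_n\,\partial x_2} & \cdots & \dfrac{\partial^2 f}{\partial x_n^2}
\end{pmatrix}
$$

Therefore, the Hessian matrix will always be a square matrix whose dimension is equal to the number of variables of the function. For example, if the function has 3 variables, the Hessian matrix will be a 3×3 matrix.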

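Furthermore, Schwarz’s theorem (or Clairaut’s theorem) states that, when the second-order partial derivatives are continuous, the order of differentiation does not matter: first partially differentiating with respect to the variable $x_i$ and then with respect to the variable $x_j$ is the same as first partially differentiating with respect to $x_j$ and then with respect to $x_i$:

$$
\frac{\partial^2 f}{\partial x_i\,\partial x_j} = \frac{\partial^2 f}{\partial x_j\,\partial x_i}
$$

In other words, for such functions the Hessian matrix is a symmetric matrix.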

Thus, the Hessian matrix is the matrix with the second-order partial derivatives of a function. On the other hand, the matrix with the first-order partial derivatives of a function is the Jacobian matrix.

Hessian matrix example

Once we have seen how to calculate the Hessian matrix, let’s see an example to fully understand the concept:

- Calculate the Hessian matrix at the point (1,0) of the following multivariable function:

First of all, we have to compute the first-order partial derivatives of the function:

Once we know the first derivatives, we calculate all the second-order partial derivatives of the function:

Now we can find the Hessian matrix using the formula for 2×2 matrices:

So the Hessian matrix evaluated at the point (1,0) is:
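The procedure can also be checked numerically. The sketch below is an illustration under stated assumptions: the function `example` (f(x, y) = x³y + y²) and the helper `numerical_hessian` are hypothetical and are not the function of the example above. It approximates every second-order partial derivative with a central difference at the point (1, 2), where the analytic Hessian of this illustrative function is [[12, 3], [3, 2]].

```python
from typing import Callable, List, Sequence


def numerical_hessian(
    f: Callable[[Sequence[float]], float],
    point: Sequence[float],
    h: float = 1e-4,
) -> List[List[float]]:
    """Approximate the Hessian of a scalar function with central differences."""
    n = len(point)
    hess = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):

            def shifted(si: int, sj: int) -> float:
                # Move variable i by si*h and variable j by sj*h, then evaluate f.
                p = list(point)
                p[i] += si * h
                p[j] += sj * h
                return f(p)

            hess[i][j] = (
                shifted(1, 1) - shifted(1, -1) - shifted(-1, 1) + shifted(-1, -1)
            ) / (4 * h * h)
    return hess


def example(p: Sequence[float]) -> float:
    """Hypothetical function f(x, y) = x^3 * y + y^2."""
    x, y = p
    return x**3 * y + y**2


H = numerical_hessian(example, [1.0, 2.0])
for row in H:
    print([round(value, 3) for value in row])
```

The two off-diagonal entries agree, which is the symmetry expected for a function with continuous second-order partial derivatives.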

Practice problems on finding the Hessian matrix

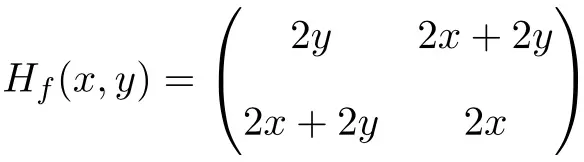

Problem 1

Find the Hessian matrix of the following 2 variable function at point (1,1):

Solution

First, we compute the first-order partial derivatives of the function:

Then we calculate all the second-order partial derivatives of the function:

So the Hessian matrix is defined as follows:

Finally, we evaluate the Hessian matrix at point (1,1):

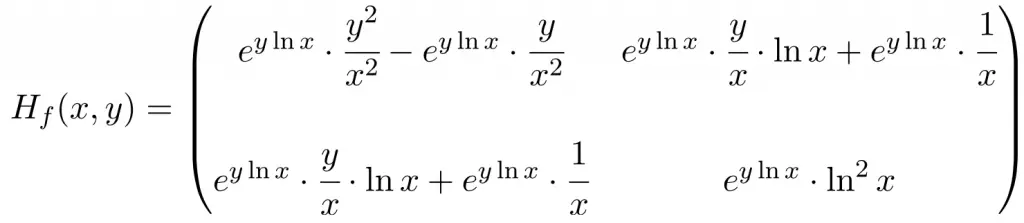

Problem 2

Calculate the Hessian matrix at the point (1,1) of the following function with two variables:

Solution

First, we differentiate the function with respect to x and with respect to y:

Once we have the first derivatives, we calculate the second-order partial derivatives of the function:

So the Hessian matrix of the function is a square matrix of order 2:

And we evaluate the Hessian matrix at point (1,1):

Problem 3

Compute the Hessian matrix at the point (0,1,π) of the following 3 variable function:

Solution

To compute the Hessian matrix first we have to calculate the first-order partial derivatives of the function:

Once we have computed the first derivatives, we calculate the second-order partial derivatives of the function:

So the Hessian matrix of the function is a square matrix of order 3:

And finally, we substitute the respective values of the point (0,1,π) for the variables:

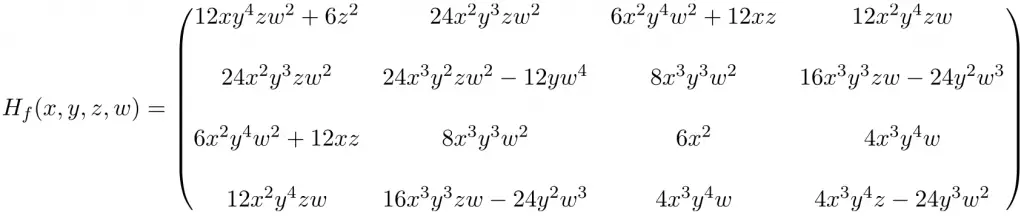

Problem 4

Determine the Hessian matrix at the point (2, -1, 1, -1) of the following function with 4 variables:

Solution

The first step is to find the first-order partial derivatives of the function:

Now we solve the second-order partial derivatives of the function:

So the expression of the 4×4 Hessian matrix obtained by solving all the partial derivatives is the following:

And finally, we substitute the respective values of the point (2, -1, 1, -1) for the unknowns and perform the calculations:

Applications of the Hessian matrix

You may be wondering… what is the Hessian matrix for? Well, the Hessian matrix has several applications in mathematics. Next, we will see what the Hessian matrix is used for.

Minimum, maximum, or saddle point

If the gradient of a function is zero at some point, that is, $\nabla f(x) = 0$, then the function f has a critical point at x. We can then determine whether that critical point is a local minimum, a local maximum, or a saddle point using the Hessian matrix:

- If the Hessian matrix is positive definite (all the eigenvalues of the Hessian matrix are positive), the critical point is a local minimum of the function.

- If the Hessian matrix is negative definite (all the eigenvalues of the Hessian matrix are negative), the critical point is a local maximum of the function.

- If the Hessian matrix is indefinite (the Hessian matrix has positive and negative eigenvalues), the critical point is a saddle point.

Note that if the Hessian matrix has an eigenvalue equal to 0 and its remaining eigenvalues all have the same sign, the test is inconclusive: we cannot know whether the critical point is an extremum or a saddle point.
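The classification rules can be sketched for the 2×2 case, where the eigenvalues of a symmetric matrix have a closed form. The function names `symmetric_2x2_eigenvalues` and `classify_critical_point` and the tolerance `tol` are assumptions made for this illustration; larger matrices would need a general eigenvalue routine.

```python
import math
from typing import List, Tuple


def symmetric_2x2_eigenvalues(h: List[List[float]]) -> Tuple[float, float]:
    """Eigenvalues of a symmetric 2x2 matrix [[a, b], [b, c]], smallest first."""
    a, b, c = h[0][0], h[0][1], h[1][1]
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    return mean - radius, mean + radius


def classify_critical_point(h: List[List[float]], tol: float = 1e-12) -> str:
    """Classify a critical point from the Hessian evaluated at that point."""
    low, high = symmetric_2x2_eigenvalues(h)
    if low > tol:
        return "local minimum"   # all eigenvalues positive
    if high < -tol:
        return "local maximum"   # all eigenvalues negative
    if low < -tol and high > tol:
        return "saddle point"    # eigenvalues of both signs
    return "inconclusive"        # a zero eigenvalue and no sign change


# Hessian of the illustrative function x^2 - y^2 at its critical point (0, 0).
print(classify_critical_point([[2.0, 0.0], [0.0, -2.0]]))
```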

Convexity or concavity

Another use of the Hessian matrix is to determine whether a function is concave or convex. This can be done by applying the following theorem.

Let $A \subseteq \mathbb{R}^n$ be an open convex set and $f: A \to \mathbb{R}$ a function whose second derivatives are continuous. Its concavity or convexity is determined by the Hessian matrix:

- Function f is convex on set A if, and only if, its Hessian matrix is positive semidefinite at all points on the set.

- Function f is strictly convex on set A if its Hessian matrix is positive definite at all points on the set. The converse does not hold: $f(x) = x^4$ is strictly convex although its second derivative is zero at $x = 0$.

- Function f is concave on set A if, and only if, its Hessian matrix is negative semidefinite at all points on the set.

- Function f is strictly concave on set A if its Hessian matrix is negative definite at all points on the set (again, the converse does not hold).
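As a short worked example with a function chosen here for illustration, take $f(x, y) = x^2 + xy + y^2$. Its Hessian matrix is the same at every point:

$$
H_f = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}, \qquad
\det(H_f - \lambda I) = (2 - \lambda)^2 - 1 = 0 \;\Longrightarrow\; \lambda = 1,\ \lambda = 3
$$

Both eigenvalues are positive, so the Hessian is positive definite everywhere and this function is strictly convex on $\mathbb{R}^2$.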

Taylor polynomial

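The expansion of the Taylor polynomial for functions of 2 or more variables at the point $\mathbf{a}$ begins as follows:

$$
T(\mathbf{x}) = f(\mathbf{a}) + (\mathbf{x} - \mathbf{a})^{T}\,\nabla f(\mathbf{a}) + \frac{1}{2}\,(\mathbf{x} - \mathbf{a})^{T}\,H_f(\mathbf{a})\,(\mathbf{x} - \mathbf{a}) + \cdots
$$

As you can see, the second-order terms of the Taylor expansion are given by the Hessian matrix evaluated at the point $\mathbf{a}$. This application of the Hessian matrix is very useful in large optimization problems.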
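A minimal sketch of a second-order Taylor approximation follows, using an illustrative function chosen here: f(x, y) = eˣ cos y expanded at (0, 0), where the value is 1, the gradient is (1, 0), and the Hessian is [[1, 0], [0, -1]] (all derived by hand). The helper name `taylor2` is hypothetical.

```python
import math
from typing import List, Sequence


def f(x: float, y: float) -> float:
    """Hypothetical function f(x, y) = exp(x) * cos(y)."""
    return math.exp(x) * math.cos(y)


def taylor2(
    point: Sequence[float],
    base: Sequence[float],
    value: float,
    gradient: Sequence[float],
    hessian: List[List[float]],
) -> float:
    """Second-order Taylor polynomial built from value, gradient and Hessian at `base`."""
    d = [p - b for p, b in zip(point, base)]
    linear = sum(g * di for g, di in zip(gradient, d))
    quadratic = sum(
        d[i] * hessian[i][j] * d[j] for i in range(len(d)) for j in range(len(d))
    )
    return value + linear + 0.5 * quadratic


approx = taylor2([0.1, 0.2], [0.0, 0.0], 1.0, [1.0, 0.0], [[1.0, 0.0], [0.0, -1.0]])
exact = f(0.1, 0.2)
print(approx, exact)
```

The quadratic term is where the Hessian enters; dropping it leaves the linear approximation.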

Bordered Hessian matrix

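Another use of the Hessian matrix is to calculate the minimums and maximums of a multivariable function $f(\mathbf{x})$ restricted by another function $g(\mathbf{x})$, that is, subject to a constraint of the form $g(\mathbf{x}) = c$.

To solve this problem, we use the bordered Hessian matrix, which is calculated by applying the following steps:

Step 1: Calculate the Lagrange function, which is defined by the following expression:

$$
L(\mathbf{x}, \lambda) = f(\mathbf{x}) + \lambda\,\big(g(\mathbf{x}) - c\big)
$$

Step 2: Find the critical points of the Lagrange function. To do this, we calculate the gradient of the Lagrange function, set the equations equal to 0, and solve the equations.

Step 3: For each point found, calculate the bordered Hessian matrix, which for a single constraint is defined by the following formula:

$$
\bar{H}(f, g) = \begin{pmatrix}
0 & \dfrac{\partial g}{\partial x_1} & \cdots & \dfrac{\partial g}{\partial x_n} \\
\dfrac{\partial g}{\partial x_1} & \dfrac{\partial^2 L}{\partial x_1^2} & \cdots & \dfrac{\partial^2 L}{\partial x_1\,\partial x_n} \\
\vdots & \vdots & \ddots & \vdots \\
\dfrac{\partial g}{\partial x_n} & \dfrac{\partial^2 L}{\partial x_n\,\partial x_1} & \cdots & \dfrac{\partial^2 L}{\partial x_n^2}
\end{pmatrix}
$$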

Step 4: Determine for each critical point whether it is a maximum or a minimum:

- The critical point will be a local maximum of the function $f$ under the restrictions of the function $g$ if the last n-m (where n is the number of variables and m is the number of constraints) leading principal minors of the bordered Hessian matrix evaluated at the critical point have alternating signs, with the smallest of them having the sign of $(-1)^{m+1}$.

- The critical point will be a local minimum of the function $f$ under the restrictions of the function $g$ if the last n-m (where n is the number of variables and m is the number of constraints) leading principal minors of the bordered Hessian matrix evaluated at the critical point all have the sign of $(-1)^{m}$. For a single constraint (m = 1), this means that they are all negative.

Note that the local minimums or maximums of a restricted function do not have to be minimums or maximums of the unrestricted function. So the bordered Hessian matrix only works for this type of constrained problem.
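The steps above can be sketched on a small illustrative problem chosen here: minimize f(x, y) = x² + y² subject to x + y = 2. Assuming the Lagrange function L = x² + y² + λ(x + y − 2), the critical point is (1, 1) with λ = −2, the gradient of the constraint is (1, 1), and the second derivatives of L with respect to x and y form the matrix [[2, 0], [0, 2]]. The helper names `bordered_hessian` and `det3` are hypothetical. With n = 2 variables and m = 1 constraint, only the last n − m = 1 minor is needed, which is the determinant of the whole 3×3 matrix.

```python
from typing import List, Sequence


def bordered_hessian(
    grad_g: Sequence[float], hess_l: List[List[float]]
) -> List[List[float]]:
    """Border the Hessian of the Lagrange function with the constraint gradient."""
    n = len(grad_g)
    top = [0.0] + list(grad_g)
    rows = [[grad_g[i]] + list(hess_l[i]) for i in range(n)]
    return [top] + rows


def det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


bh = bordered_hessian([1.0, 1.0], [[2.0, 0.0], [0.0, 2.0]])
determinant = det3(bh)
print(bh)
print(determinant)
```

The determinant is negative, so the point (1, 1) is a local minimum of x² + y² on the line x + y = 2, which matches the geometric picture of the point on that line closest to the origin.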

Source:

https://www.algebrapracticeproblems.com/hessian-matrix/

https://www.algebrapracticeproblems.com/jacobian-matrix-determinant/